Facebook announced two changes today that it hopes will make it easier to staunch the spread of fake news. The first change is to the News Feed, where users will no longer see “Disputed Flags,” or red badges displayed under articles flagged by Facebook’s third-party fact-checkers. Instead, they will see Related Articles, or links to content from reputable publishers. The second change is a new initiative to help Facebook understand how people judge the accuracy of information based on the news sources they use, which won’t result in any immediate changes to the News Feed, but is meant to help the company gauge how well its efforts to stop the spread of misinformation are working.

Along with Google and Twitter, Facebook is currently under pressure from critics who say it hasn’t done enough to combat fake news on its platform, including articles by “troll farms” that disseminate misinformation to make a profit or sway public opinion on politics and other hot-button issues. The issue became more urgent during the presidential election, and all three companies have been called to testify in congressional hearings over how their platforms were used by Russian-backed trolls to influence U.S. politics.

Almost exactly one year ago, Facebook implemented several changes to fight fake news, including easier steps to report articles, partnerships with fact-checking organizations and features, like Disputed Flags, that alert people when they are about to read or share articles that have been identified by fact-checkers as fake news. Facebook also started demoting fake news links, which it says usually means they lose 80 percent of their traffic.

In today’s announcement, Facebook product manager Tessa Lyons said Facebook decided to replace Disputed Flags with Related Articles because the red badges actually had the effect of reinforcing beliefs.

“Academic research on correcting misinformation has shown that putting a strong image, like a red flag, next to an article may actually entrench deeply held beliefs—the opposite effect to what we intended,” Lyons wrote. “Related Articles, by contrast, are simply designed to give more context, which our research has shown is a more effective way to help people get to the facts. Indeed, we’ve found that when we show Related Articles next to a false news story, it leads to fewer shares than when the Disputed Flag is shown.”

Launched in 2013, Related Articles are what Facebook calls the links it displays on News Feeds after users finish reading an article. Related Articles were originally created to boost engagement and prevent people’s News Feeds from being flooded with silly memes by directing them to content from reputable publishers instead. Then in April of this year, Facebook announced a test that showed Related Articles before articles about trending topics, with the intent of giving users “easier access to additional perspectives and information.”

Another blog post written by the team leading Facebook’s efforts against fake news–product designer Jeff Smith, user experience researcher Grace Jackson and content strategist Seetha Raj–gives more insight into today’s announcement. Over the past year, the team says they visited different countries to conduct research into how misinformation spreads in different contexts and how people react to “designs meant to inform them that what they are reading is fake news.”

As a result, they identified four major ways the Disputed Flags feature could be improved.

First, the team wrote, Disputed Flags need to tell people immediately why fact-checkers dispute an article, because most users won’t bother clicking on links to additional information. Second, strong language or images like a red flag sometimes backfire by reinforcing beliefs, even if they are marked as false. Third, Facebook only applied Disputed Flags after two fact-checking organizations had determined an article was false, but that meant Facebook sometimes did not act quickly enough, especially in countries with very few fact-checkers.

Finally, some of Facebook’s fact-checking partners rated articles on a scale (for example, “false,” “partly false,” “unproven” or “true”), so context and nuance were lost when a Disputed Flag was applied, especially on rare occasions when two organizations fact-checked the same article but came to different conclusions about its credibility.

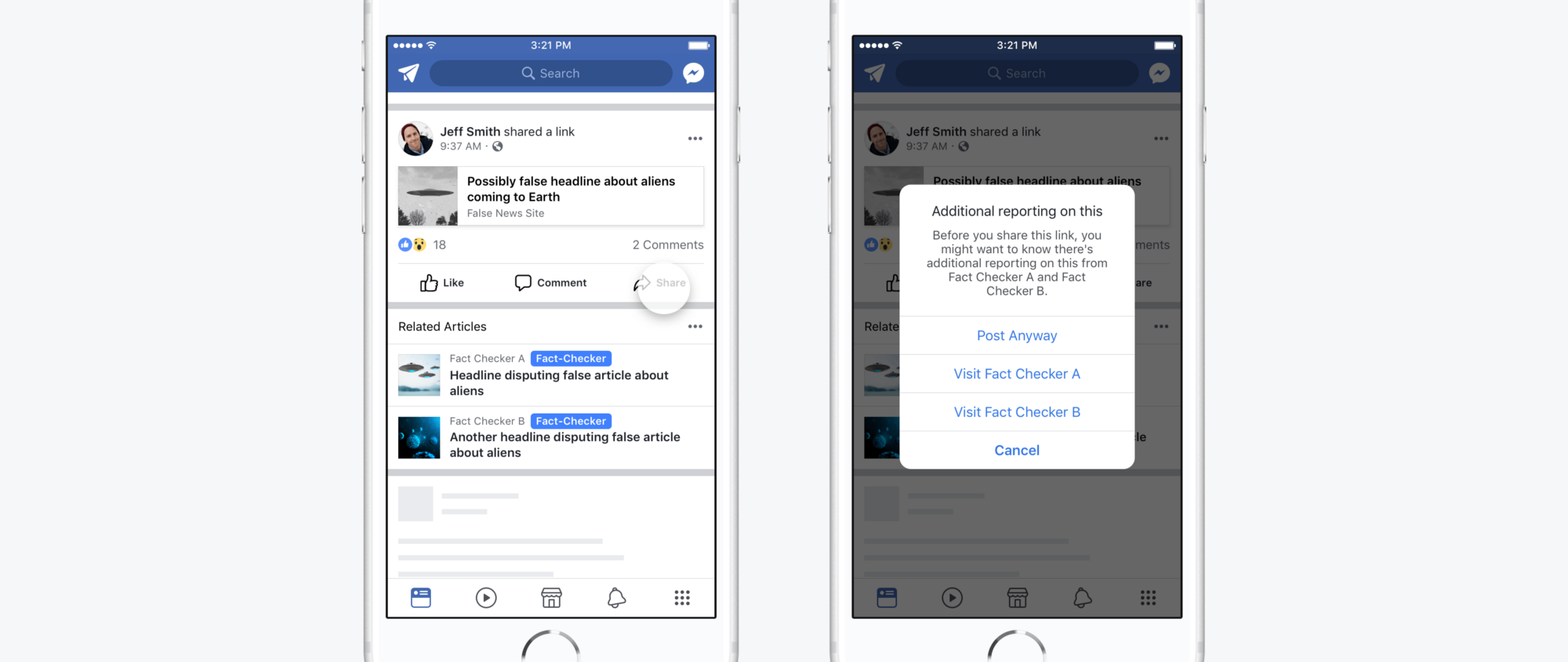

Displaying Related Articles before someone clicks on a link is meant to address all of those issues by making it easier to get context, requiring only one fact-checker’s review, working even for articles that got different ratings and preventing the kind of reaction that might cause someone to dig in their heels about a belief, even if it is wrong.

Furthermore, even though the new application of Related Articles doesn’t “meaningfully change” clickthrough rates, Facebook’s anti-fake news team says it leads to fewer shares. In a bid to increase transparency, users will also now see badges that identify which fact-checkers reviewed an article.

“As some of the people behind this product, designing solutions that support news readers is a responsibility we take seriously,” wrote Smith, Jackson and Raj. “We will continue working hard on these efforts by testing new treatments, improving existing treatments and collaborating with academic experts on this complicated misinformation problem.”