Google today announced the launch of version 0.8 of TensorFlow, its open source library for the heavy computation that makes machine learning possible. Normally, a minor point release like this wouldn’t be all that interesting, but with this version, TensorFlow can now run the training processes for machine learning models across hundreds of machines in parallel.

This means that training complex models with TensorFlow could often take hours rather than days or even weeks.

The company says distributed computing has long been one of the most requested features for TensorFlow. With this release, Google is essentially making the technology that powers much of its recently announced hosted Google Cloud Machine Learning platform available to all developers.

Google says it’s using the gRPC library to handle communication among all of these machines. The company is also launching a distributed trainer for the Inception image classification neural network that uses Kubernetes, its container management system, to scale the processing up to hundreds of machines and GPUs. As part of this release, Google is also adding a new library to TensorFlow for building these distributed models.

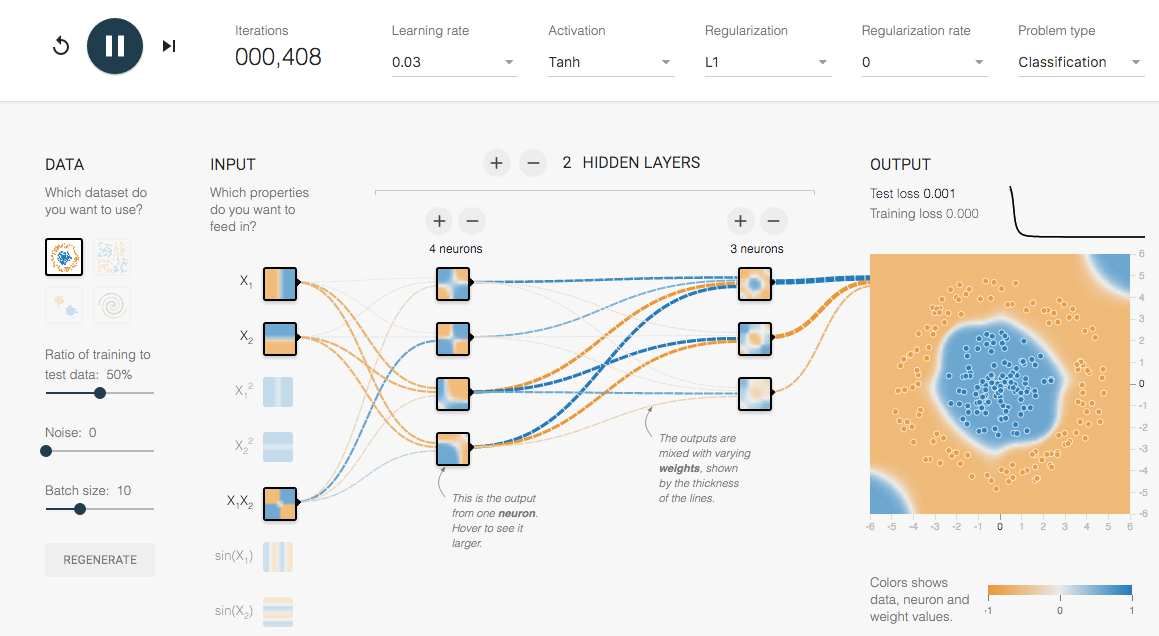

If you want to play with TensorFlow without going through what is still a fairly complex setup process, Google also offers a browser-based simulator that lets you experiment with a basic TensorFlow setup and with deep learning.