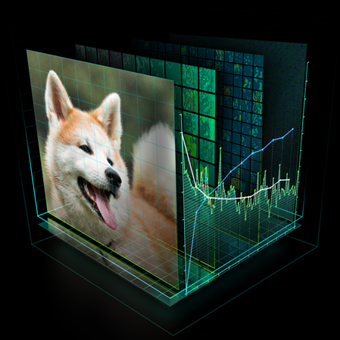

The sophisticated neural networks underlying systems like Google’s Deep Dream and all manner of interesting experiments require a great deal of computing power. NVIDIA proposes to put all that horsepower in a single box, specially engineered to meet the needs of AI researchers.

NVIDIA already makes GPUs built for deep learning, so this was a logical next step. It’s called the DGX-1, and it’s basically a fancy enclosure for an 8-GPU supercomputing cluster. Not that there’s anything wrong with that. Inside are 8 Tesla P100 cards with 16 GB of memory each, plus 7 TB of storage for all the raw data you’ll be training your networks on. The cards are wired together with something called an NVLink Hybrid Cube Mesh, which sounds made up but is NVIDIA’s name for the high-bandwidth interconnect between the GPUs.

There’s built-in neural network training software, though many researchers and companies will want to roll their own solutions. The DGX-1 is happy to crunch data either way. A standardized platform like this takes the guesswork out of building a system, and having a big name like NVIDIA providing support and regular updates is a nice way to justify the extra cost to your budget people.

Still not clear on what exactly a deep learning system or neural network is? The short version is that they are programs, loosely inspired by the way the brain works, that learn by looking very closely at a huge set of data and noting similarities and differences at multiple levels of organization.

The result is a system that can quickly and effectively perform tasks like image analysis and object or pattern recognition. The field is still fairly new even to many people in AI and computer science, so don’t feel bad if you didn’t know, or if I’m bad at explaining it.

Systems like Deep Dream can run on regular CPUs and the like, of course, but as with so many other data-intensive computing tasks, parallel processing beats serial any day of the week. GPUs are massively parallel by design, since they have to push huge amounts of data through under extremely strict time constraints, so they’re a great match for supercomputing rigs. Eight Teslas working in parallel are nothing to sneeze at (together they deliver up to 170 teraflops of half-precision performance), and while you could rent time on a cloud cluster with more raw power, there’s a lot to be said for running your own hardware in-house. (Though you’ll probably be relying on NVIDIA for troubleshooting and maintenance.)

You’re in for some sticker shock, though: the DGX-1 costs $129,000. No one said the future was going to be cheap!
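For a rough sense of what that money buys, here is some back-of-the-envelope math using only the figures quoted above (8 GPUs, 16 GB each, 170 peak half-precision teraflops, $129,000). It’s a simple sketch, not a benchmark: peak teraflops are a theoretical ceiling, and real training workloads won’t hit them.

```python
# Back-of-the-envelope DGX-1 figures, using only the specs quoted in this article.
NUM_GPUS = 8
MEMORY_PER_GPU_GB = 16
TOTAL_TFLOPS = 170  # NVIDIA's peak half-precision (FP16) figure for the whole box
PRICE_USD = 129_000

# Split the headline throughput evenly across the eight Tesla P100 cards.
tflops_per_gpu = TOTAL_TFLOPS / NUM_GPUS
# Add up the on-card memory across all GPUs.
total_gpu_memory_gb = NUM_GPUS * MEMORY_PER_GPU_GB
# Price divided by peak throughput: a crude "dollars per teraflop" figure.
usd_per_tflops = PRICE_USD / TOTAL_TFLOPS

print(f"{tflops_per_gpu:.2f} TFLOPS per GPU")
print(f"{total_gpu_memory_gb} GB of GPU memory in total")
print(f"${usd_per_tflops:,.0f} per TFLOPS")
```

That works out to about 21.25 teraflops per card, 128 GB of GPU memory across the box, and roughly $759 per peak teraflop.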