Nvidia’s new Tegra X1 mobile chipset is a veritable beast: It’s able to provide almost two times the graphics performance of the iPad Air 2’s A8X while also consuming just about the same amount of power, and it’s already in production, meaning tablets sporting the X1’s graphical prowess should be available to consumers in the relatively near future.

The Tegra X1’s benchmarks suggest a future where we’ll see tablets come even closer to approximating the gaming capabilities of full desktop computers. X1 is based on Maxwell, after all, the microarchitecture used in the most recent GeForce GTX desktop graphics cards, meaning that just like the K1 and Kepler before it, X1 makes it easy for developers to use desktop gaming APIs to bring their products to mobile with far fewer intricacies involved.

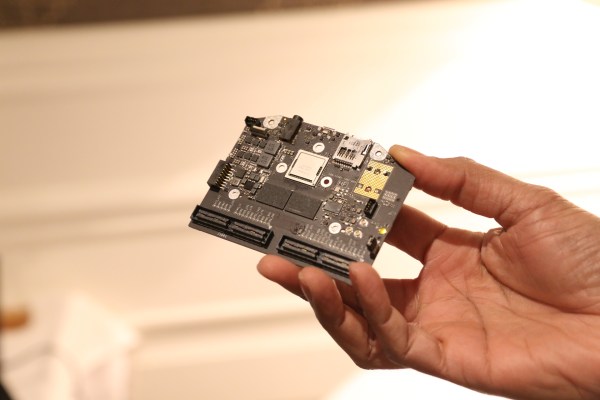


X1 features a 256-core Maxwell GPU and an 8-core 64-bit ARM CPU, and can handle 60fps 4K UHD playback of video encoded in either H.265 or VP9. Like the Apple-designed A8X, it uses a 20nm process, which means it’s fairly easy to benchmark it against the Apple tablet in a straight-up graphics comparison. Nvidia obliged us with just such a test, using a range of benchmarks to illustrate exactly how it compares not only to the iPad, but also to the current, Tegra K1-powered Nvidia Shield Tablet, which owns the graphics performance crown among Android slates.

Nvidia’s own tests show the X1 performing at just about twice the rate of the Tegra K1 in 1080p offscreen performance. In 3DMark 1.3 Ice Storm Unlimited, it scores about 1.5x the K1 and almost twice the iPad Air 2, and in Basemark X 1.1 it scores about 1.5x both the iPad and the K1. But perhaps its most impressive improvements over comparable chipsets revolve around energy efficiency: The X1 offers about twice the performance of the K1 architecture as power draw increases, and about 1.7x the performance of the A8X on average for the same power consumption.

Nvidia Tegra X1 vs. A8X energy efficiency – X1 is the blue line.

The work that Nvidia is doing on mobile graphics and chipsets has tremendous potential for Netflix, as well as for other content providers that are pushing the boundaries with streaming 4K video. 60fps is especially important to action and sports content, and to video games, of course. But being able to play back video at that resolution also has benefits for emerging technologies like virtual reality, which can improve dramatically by playing ever-higher resolutions at frame rates that stay smooth and seamless for up-close and personal viewing.

A side-by-side demo of what the Tegra X1 can handle vs. the competition revealed that it can output to 4K-capable displays at a buttery smooth 60fps, which makes 30 seem almost staccato by comparison. Of course, this means having a 4K, 60fps source to begin with, which isn’t currently that easy to come by. Recently introduced devices, including the GoPro Hero 4 Black, which shoots at exactly that resolution and framerate, mean we’ll probably have a lot more content coming soon to make that relevant to a wider swath of the population.

Of course, in order to really impress, Nvidia has to make sure there’s software to take advantage of the power it provides, and in the Android world, that’s not always easy. Because of the platform’s tendency towards fragmentation, developers often design for target specs that hit the wide and not-so-lofty middle section of users, meaning pushing graphical and other computational limits to their max doesn’t enter into their application designs.

Still, that’s a big part of why Nvidia has developed its own platforms, including the Shield line of devices, and it’s likely the company will have announcements in that realm soon now that the X1 is in production. Along with new hardware will come optimized software, too, so users won’t have long to wait before they get their first taste of what the X1 means for actual lived computing.