Last month Apple confirmed the acquisition of Israeli motion-sensing company PrimeSense. Initial thoughts turned towards applications in the Apple TV set-top box, but other reports suggested that Apple was interested in its ‘mapping’ capabilities as well.

After some digging and asking around, it seems there might be more to this particular acquisition than meets the eye. Apple could very well be interested in incorporating PrimeSense technology into its TV offerings, in order to recognize gestures for interaction. But when Apple purchases companies, it often has both short- and long-term goals in mind for their technology. The 2012 purchase of AuthenTec and its fingerprint-sensing technology is an example of Apple bringing acquired technology to market after a period of development and refinement. Something similar could be in the works for PrimeSense.

Specifically, we’re hearing from our sources that Apple was likely interested in a new mobile chipset PrimeSense was developing that would eventually be suited for devices like iPhones and iPads. These chips and accompanying sensor and software tech could be used for a variety of purposes like identity recognition and indoor space mapping.

But in order to understand where Apple is coming from and where this tech might be going in the future, we have to chat for a minute about perceptive computing.

A Brief Primer On Perceptive Computing

Perceptive, or ‘perceptual’, computing is a relatively new field that’s all about capturing and analyzing data with sensors like cameras and infrared projectors, plus the visualization software that interprets what they capture. The purpose is to create a computer simulation that mimics human insight or intuition in a variety of areas, one of which is visualizing a three-dimensional space in a way that’s impossible for traditional cameras.

This field of study is related to natural user interfaces, which aim to let humans interact with computers in a more natural, human way.

The field is actually quite a bit broader than this, but those are the components that matter here. One of the primary proponents of this kind of perceptive computing has been Intel, which has an entire group devoted to exploring this kind of computer vision to make computers ‘aware’ of their surroundings. The purpose? To enable something called ‘intent-based computing’.

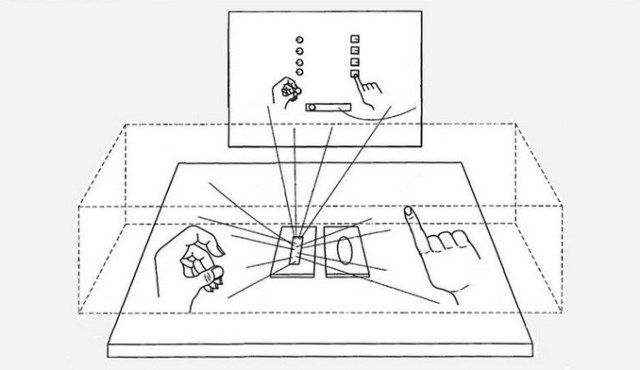

Through a combination of voice, facial recognition, gesture recognition and awareness of signals like depth and 3D space, perceptive computing will allow us to interact with computers in ways that go far beyond ‘2D gestures’. Though touch screens have dominated computer interaction in the six years since the introduction of the iPhone, they’re just the tip of the iceberg when it comes to moving beyond the ‘mouse and keyboard’ model of computer control.

Imagine, for instance, if your smartphone could react not just to voice commands, but also to the location where they were issued, the identity of the person issuing them and other contextual signals like which apps you have installed and what you happen to be doing at the time. If you issue a command like “shop for groceries” while looking at a family member rather than your phone, the command could be ignored. Deeper facial recognition also brings security benefits: if it’s not you using your phone, the interface could switch to a guest mode or lock entirely.

A recent product of Intel’s open Perceptual Computing SDK is a new kind of “visual-musical” instrument called JOY. It uses hand gestures (and, eventually, facial recognition) to create visual and audio effects.

The privacy implications are enough to make one shiver, but the applications are powerful enough for companies to invest hundreds of millions of dollars just to explore the possibilities. This is going to happen whether we like it or not, and anyone in the mobile market has to have some skin in this game. Intel, for its part, acquired Omek Interactive, another Israeli 3D gesture company, earlier this year.

That’s why you’ll see companies like Amazon experiment with smartphones that have multiple cameras for facial tracking, 3D object recognition and more.

There is a lot more to perceptive computing than the kind of ‘3D motion sensor tracking’ that would apply to a product like the Apple TV. That’s where PrimeSense comes in.

PrimeSense

PrimeSense began as a company that made 3D sensing software and camera systems from off-the-shelf-ish parts. It then made a huge bet on itself by choosing not to just license its system to manufacturers but to also become a silicon company. That’s an insane market to just waltz into, and it takes real conviction that you’ve got something special to do that. Silicon is expensive, difficult to manufacture and relies on a limited number of foundries to produce.

But that’s just what it did, and it paid off with a big partnership deal with Microsoft for the original Kinect. Originally dubbed Project Natal, the device was a much more refined and polished version of PrimeSense’s original cobbled-together 3D sensor, which had garnered attention first from investors and eventually from Microsoft incubation director Alex Kipman.

After the Microsoft deal, PrimeSense didn’t stand still. It made arrangements to supply sensors to other companies, like Y Combinator startup Matterport, Qualcomm’s Vuforia gaming platform and 3D scanning company Occipital. Matterport is a hardware company that sells cameras which can capture interiors in their entirety, mapping out objects and architecture quickly and cleanly.

We spoke to Matterport CEO Bill Brown about why the company chose PrimeSense for its systems. He said several things about the Carmine 3D sensor made sense for Matterport. First, it was an active system rather than a passive stereo system, so it didn’t depend on ambient visible light. That let Matterport combine multiple sensors into one camera and, with its own software, quickly build accurate models of environments that included both 3D geometry and 2D texture information. Brown also noted that PrimeSense had an aggressive cost structure, which made it easy to buy the needed silicon and camera systems whole, from one manufacturer, rather than sourcing each part separately.

Brown didn’t share any details about whether Matterport would need to move away from the PrimeSense platform now, but said they’re obviously keeping an eye on the situation.

PrimeSense uses a structured infrared light system that projects a pattern of invisible dots onto a scene. An infrared sensor captures where those dots land; because their apparent positions shift with distance, the system can work out depth, which it then combines with images from a conventional digital camera sensor to craft a map of the scene. This method is sometimes referred to as ‘RGB-D’, because it produces a traditional red, green and blue image plus ‘depth’ information.
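To make the structured-light idea concrete, here is a minimal sketch of the triangulation such systems rely on. It assumes an idealized, already-calibrated projector and infrared camera separated by a known baseline, with the depth image already aligned to the color image. The function names, focal length, baseline and disparity values are hypothetical and illustrative, not PrimeSense’s actual pipeline. A dot’s sideways shift (its disparity) in the infrared image shrinks as distance grows, so depth is focal length times baseline divided by disparity.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RGBDPixel:
    """One colour pixel paired with a depth value in metres."""
    r: int
    g: int
    b: int
    depth_m: float


def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Triangulate depth from how far a projected dot appears shifted in the IR image.

    Idealized model: Z = f * b / d. Nearer surfaces produce larger shifts.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (no dot match means no depth)")
    return focal_px * baseline_m / disparity_px


def make_rgbd(
    rgb: List[List[Tuple[int, int, int]]],
    disparity: List[List[float]],
    focal_px: float,
    baseline_m: float,
) -> List[List[RGBDPixel]]:
    """Attach a depth value to every colour pixel.

    Assumes (hypothetically) that the RGB and IR images are already registered,
    so pixel (y, x) refers to the same point in both.
    """
    return [
        [
            RGBDPixel(*rgb[y][x], depth_from_disparity(disparity[y][x], focal_px, baseline_m))
            for x in range(len(rgb[y]))
        ]
        for y in range(len(rgb))
    ]


if __name__ == "__main__":
    # Illustrative numbers only: 580 px focal length, 7.5 cm baseline.
    image = make_rgbd([[(255, 0, 0), (0, 0, 255)]], [[29.0, 58.0]], 580.0, 0.075)
    for px in image[0]:
        print(px)
```

With these numbers the red pixel lands at about 1.5 m and the blue one at about 0.75 m: doubling the disparity halves the depth, which is also why a structured-light sensor’s precision falls off as objects get farther away.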

Other technologies in this space include the plain old passive stereoscopic method, which uses two or more cameras collecting visible light from separate angles. The most popular approach at the moment, however, is based on ‘time of flight’ (TOF). This kind of system measures how long it takes light to travel from an emitter to an object and back to a sensor, and uses that time to calculate depth. It’s the approach Microsoft uses in the Kinect 2.0 that ships with the Xbox One.
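The time-of-flight arithmetic is simple enough to show directly. The sketch below assumes an ideal sensor with no noise or multipath error, and its function names are hypothetical. A pulsed system halves the measured round-trip time because the light covers the distance twice. Many TOF cameras instead modulate their light and measure the phase lag of the returning signal; the second function shows that common variant, which is only unambiguous out to c / (2f) for a modulation frequency f. This sketch does not claim that any particular product, Kinect 2.0 included, uses exactly this scheme.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def depth_from_round_trip(seconds: float) -> float:
    """Pulsed TOF: light goes out and comes back, so halve the total path."""
    return SPEED_OF_LIGHT_M_PER_S * seconds / 2.0


def depth_from_phase_shift(phase_rad: float, modulation_hz: float) -> float:
    """Continuous-wave TOF: d = c * phase / (4 * pi * f).

    A full 2*pi phase wrap corresponds to the maximum unambiguous range c / (2 * f).
    """
    return SPEED_OF_LIGHT_M_PER_S * phase_rad / (4.0 * math.pi * modulation_hz)


def unambiguous_range_m(modulation_hz: float) -> float:
    """Beyond this distance, phase measurements wrap around and repeat."""
    return SPEED_OF_LIGHT_M_PER_S / (2.0 * modulation_hz)


if __name__ == "__main__":
    print(depth_from_round_trip(10e-9))           # a 10 ns round trip
    print(depth_from_phase_shift(math.pi, 30e6))  # half a cycle at 30 MHz
    print(unambiguous_range_m(30e6))
```

A 10-nanosecond round trip works out to roughly 1.5 m, which shows why TOF sensors need extremely precise timing electronics: one centimeter of depth corresponds to a round-trip difference of only about 67 picoseconds.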

You’ll notice that the technology PrimeSense uses and the kind the new Kinect uses are different. This is because, several years ago, Microsoft began acquiring companies to build its own version of what PrimeSense was selling it.

Perceptive computing generally requires a couple of components: software to detect and parse 3D information, and software to detect and parse gestures. Microsoft bought Canesta, a 3D sensing company, in 2010 to cover the first, and 3DV, a gesture-detecting 3D vision company also based in Israel, to cover the second.

We don’t have any inside info on why PrimeSense sold to Apple, though the price has been rumored to be around $350M.

Before it was acquired, PrimeSense had produced an embedded system called Capri that was small enough to potentially be included in tablets or a set-top box. It was essentially a compact version of its original sensors, with a host of accuracy improvements. It was, however, not nearly small enough for phone use.

PrimeSense VP of Marketing Tal Dagan told Engadget that Capri was the ‘future of PrimeSense’. “Not only is it smaller, lower-power and cheaper, it also has better depth, better middleware that can actually run on a mobile processor… our end goal is to make it small enough to make it into every consumer device.”

He also offered up a couple of examples of how those sensors might be used:

“Instead of having to measure my daughter’s height every two weeks, I could just take a picture of her with my smartphone, and it’ll automatically know she’s grown by a few inches based on her profile and previous height.” He offered other potential ideas like a portable gaming device that utilizes motion gestures, a car-docked handset that sounds an alarm when you’re nodding off to sleep mid-drive or simply the ability to scan an object for a 3D printer.
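Dagan’s height example hints at why depth matters: a flat photo alone can’t tell a tall child far away from a short child up close. A minimal sketch, assuming a simple pinhole camera model, a subject standing roughly square to the camera and a depth sensor that reports her distance, shows how a phone could turn pixels into real-world units. The function names and numbers are hypothetical and illustrative, not anything PrimeSense described.

```python
def estimate_height_m(pixel_height: float, depth_m: float, focal_px: float) -> float:
    """Pinhole model: real size = size in pixels * distance / focal length in pixels."""
    if depth_m <= 0 or focal_px <= 0:
        raise ValueError("depth and focal length must be positive")
    return pixel_height * depth_m / focal_px


def growth_since_last_m(previous_m: float, pixel_height: float, depth_m: float, focal_px: float) -> float:
    """Compare a new depth-assisted estimate against a previously stored height."""
    return estimate_height_m(pixel_height, depth_m, focal_px) - previous_m


if __name__ == "__main__":
    # Same child photographed from two distances: depth removes the ambiguity.
    print(estimate_height_m(400, 2.0, 580.0))  # about 1.38 m
    print(estimate_height_m(800, 1.0, 580.0))  # twice the pixels at half the distance
    print(growth_since_last_m(1.30, 400, 2.0, 580.0))
```

In practice the hard part would be segmenting the child from the background and locating the top of her head and her feet; once depth is known, the conversion itself is this simple.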

However, we’ve heard that PrimeSense has been hard at work developing another, even more compact and power-efficient system that could eventually be put to work in smartphones.

That project, we understand, is nowhere near ready to ship, so we wouldn’t expect to see it in any Apple devices for a couple of years. But when it does show up, what would Apple want to do with it?

The Next Frontier Of Sensors

Your current smartphone has a bunch of sensors inside: likely accelerometers, a gyroscope and a GPS system for location. Even its radios are used to help pin down location. Together they provide a ton of contextual information about where your device (and therefore you) is and what you are doing.

But so far there has been little advancement in sensing the world outside the device: the three-dimensional space that surrounds the phone, including the user’s face and body. That’s what a 3D sensor in a smartphone would add, supplying the contextual information needed to enable the next wave of ‘intent-based computing’.

“I think it is safe to assume Apple is looking to experiment with the question: what does a world look like when our device can see and hear us,” says Creative Strategies Analyst and Techpinions columnist Ben Bajarin. There is simply too much information still left on the table.

Apple has filed patents that involve customizing a phone’s interface depending on the identity of the user, as detected by sensors that see in 3D. Recognizing the user could add an additional layer of security and personalization to your device.

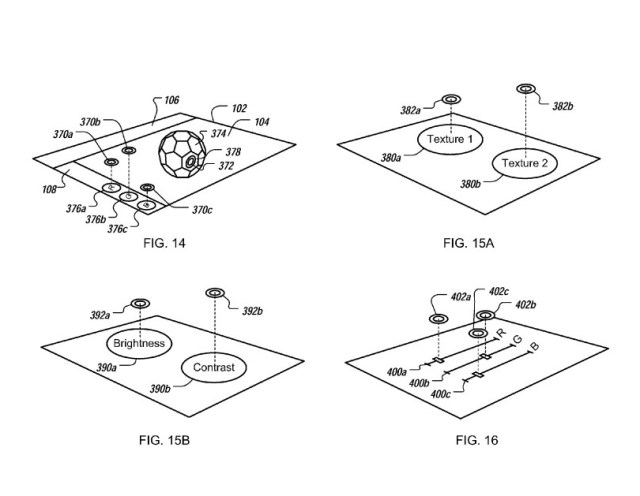

This kind of system could also be used to create new kinds of gestures that involve the ‘z’ or depth axis. Apple has filed patents for exactly that kind of application, where a user could lift their fingers off the screen to exert varied control over the interface.

Those possibilities could also extend to mapping interior spaces, something reporter Jessica Lessin noted just after the acquisition. With a sensor on board that could detect 3D spaces, Apple could enhance its indoor mapping efforts, the same goal behind its purchase of WiFiSLAM. Knowing where people are indoors is the next frontier for mapping, and Apple is already working on efforts like iBeacons that would mesh nicely with a better knowledge of how interior spaces are constructed.

There are also applications related to photography that could be interesting. Computer vision companies like Obvious Engineering and its ‘3D photo’ app Seene are already showing us what photographs could look like in the future. Seene uses small camera movements, done by hand, to zip together a depth map, but imagine a sensor that was accurate enough to create a 3D image of a scene without any camera movement at all.

Apple has filed patents for a ‘multi-camera’ 3D system and the possibilities are intriguing. With a simple snap of the shutter you could grab the moment as it really was, rather than in flat old 2D.

Apple TV

This doesn’t rule out the possibility that Apple will use technology acquired in the PrimeSense deal to create a gesture-recognition interface for its TV efforts, though. Remember, Apple often makes acquisitions that have both near-term applications and uses down the road.

Indeed, Apple has been filing patents around 3D gesture tracking and interfaces for years, which suggests it is at least pulling on this thread. The technology PrimeSense developed for Capri, and whatever its new sensor turns out to be, would likely work just as well in a small set-top box with a camera and sensor array as it would in a smartphone.

Sensorama

It’s becoming clear that perceptive computing (devices that are aware of us and their surroundings) is going to be the next big thing in portables. The things we carry with us are gaining more ways to gather and interpret data, and being able to perceive and leverage 3D space is the hurdle that many major mobile companies have chosen to leap next.

Qualcomm, a company that makes an enormous amount of radio and silicon parts for phones like the iPhone and Samsung Galaxy devices, bought GestureTek back in 2011. Besides 3DV and PrimeSense, it was one of the other big patent-holders related to gesture recognition technology. Everyone in mobile, it seems, knows that this kind of tech is table stakes for the next generation of computers.

And now Apple has a nice chunk of that pie in PrimeSense. We’ll just have to wait to see exactly how they leverage it.