Statistics are everywhere these days, and it’s difficult to know which ones to take seriously. Occasionally, a “report” comes out that’s so misleading it presents a teachable moment on how to spot a bad statistic. Last week, Twitter made headlines for claiming that users who are exposed to its political advertisements, in the form of promoted tweets, are about twice as likely to visit a campaign donation page as the “average” Internet user. However, Twitter users are also more likely to be from an educated voting demographic and, more importantly, prior research on these kinds of non-randomized advertising campaigns shows that the results can be exaggerated by as much as 2000%. Moreover, using percentages, rather than actual numbers, is a well-known technique for disguising poor results (e.g., if only 1 in a million users normally visits a website, and the ad gets one more person to visit, it has “doubled” the visitors).

Let’s break it down:

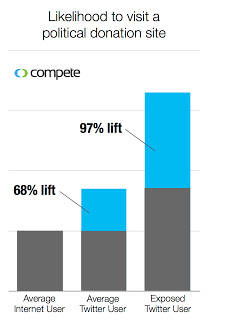

Twitter claims that “political tweets drive donations” with a graphic that shows Twitter users are 68% more likely to visit a political donation site than the “average” Internet user, and 97% more likely if they see a political advertisement, in the form of a promoted Tweet, in their feed. “This means Tweets don’t just drive Twitter users to political sites, Tweets drive people to these sites with a greater intent to donate,” explains the blog post.

The biggest problem with this claim is that it violates a fundamental rule of Statistics 101: correlation doesn’t equal causation. On any given day, for instance, there could be an unknown news story about a candidate that drives people to donate, and if a campaign coincidentally advertised on the same day, we wouldn’t know if it was the advertisement or the news that caused a spike in donations.

A much more accurate way to assess causality is to randomly expose a portion of users to an advertisement and see if they behave any differently. Indeed, a team of Yahoo researchers found that brands which don’t experimentally assign an advertisement to a small portion of users can be misled into believing that an ad is 200 times more effective than it actually is [pdf].

An online advertising campaign, Yahoo finds, will bump searches for a particular brand by about 5% (as opposed to the 1,198% boost that a correlational study would have found). Twitter is claiming a 97% boost, which should be a red flag. The fact that Twitter has offered only percentages, without the sample size behind them, should be another red flag.
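To make the percentages-versus-raw-numbers point concrete, here is a minimal sketch in Python. The function name `lift_summary` and all of the visitor counts are hypothetical, chosen only for illustration; none of them come from Twitter or Yahoo.

```python
def lift_summary(baseline_visits, exposed_visits):
    """Compare two equally sized groups of users.

    Returns (relative lift in percent, absolute number of extra visitors).
    """
    extra_visitors = exposed_visits - baseline_visits
    relative_lift_pct = extra_visitors / baseline_visits * 100
    return relative_lift_pct, extra_visitors


# Hypothetical case A: per million users, 1 normally visits and 2 visit after the ad.
tiny = lift_summary(baseline_visits=1, exposed_visits=2)

# Hypothetical case B: per million users, 10,000 normally visit and 20,000 after the ad.
big = lift_summary(baseline_visits=10_000, exposed_visits=20_000)

for label, (pct, extra) in (("A", tiny), ("B", big)):
    print(f"Case {label}: {pct:.0f}% lift, {extra} extra visitor(s) per million users")
```

Both cases report the same 100% lift, yet one reflects a single extra visitor and the other ten thousand. A percentage quoted without the underlying counts and sample size cannot tell you which situation you are looking at.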

Additionally, we don’t know who saw the ads or when they saw them. If advertisers are targeting certain users based on their likelihood to vote, then of course these users are more likely than the typical Twitter user to visit a politician’s donation page. Furthermore, when did the advertisements run? If the ads were for Mitt Romney, and they ran after his widely praised presidential debate performance, then visits to his website would naturally have been higher anyway. And would these users have visited the donation page anyway, and just happened to go through Twitter because it was convenient?

The Yahoo researchers also discovered that typical user behavior swings wildly from day to day. This means that we can’t tell whether an uptick in traffic was due to an ad or to random variation in Internet behavior. Only showing the ad to a smaller, randomly chosen set of similar users on the same day, and comparing them with similar users who didn’t see it, can give us a more accurate assessment.

> “we show that the assumption of common trends in usage between exposed and unexposed users that underlie observational methods fail to hold for a variety of online dependent measures (brand-relevant keyword searches, page views, account sign-ups) and that ignoring this failed assumption leads to massive overestimates of advertising causal effects.”

All of these unanswered questions are important because, at least in the case of the Yahoo study, they mean the difference between thinking an ad boosted interest by 1,198% and knowing that the actual boost was 5%.
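The gap between a correlational estimate and a randomized one can be illustrated with a small simulation. This is only a sketch under invented assumptions: the share of politically engaged users, their visit rates, and the targeting probabilities are all made up, and the 5% true effect simply borrows the order of magnitude from the Yahoo figure above. It does not reproduce either company's method or data.

```python
import random


def simulate(n_users=200_000, true_lift=0.05, seed=0):
    """Estimate ad lift (in percent) under targeted and randomized exposure."""
    rng = random.Random(seed)
    # For each design: exposed? -> [visits, users]
    targeted = {True: [0, 0], False: [0, 0]}
    randomized = {True: [0, 0], False: [0, 0]}

    for _ in range(n_users):
        # Assumption: 10% of users are politically engaged and far more
        # likely to visit a donation page whether or not they see an ad.
        engaged = rng.random() < 0.10
        base_rate = 0.20 if engaged else 0.01

        # Targeted campaign: engaged users are much more likely to be shown the ad.
        saw_targeted = rng.random() < (0.80 if engaged else 0.10)
        # Randomized experiment: a coin flip decides who sees the ad.
        saw_random = rng.random() < 0.50

        for table, saw_ad in ((targeted, saw_targeted), (randomized, saw_random)):
            rate = base_rate * (1 + true_lift) if saw_ad else base_rate
            table[saw_ad][0] += int(rng.random() < rate)
            table[saw_ad][1] += 1

    def lift_pct(table):
        exposed_rate = table[True][0] / table[True][1]
        control_rate = table[False][0] / table[False][1]
        return (exposed_rate / control_rate - 1) * 100

    return lift_pct(targeted), lift_pct(randomized)


targeted_lift, randomized_lift = simulate()
print(f"Targeted (correlational) estimate: {targeted_lift:.0f}%")
print(f"Randomized estimate: {randomized_lift:.0f}%")
```

In both designs the ad has the same small true effect. The targeted comparison still reports a lift many times larger, because the people who saw the ad were already the ones most likely to visit. Random assignment removes that difference between the groups, so the estimate lands near the true effect, give or take sampling noise.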

This isn’t to say that social media companies can’t do good research. Facebook helped with an experimental study, published in the prestigious journal Nature, which found that Facebook quadruples the power of a campaign message.

Twitter easily has the capacity to do more accurate and honest research. We’ll hold their feet to the fire until they do. In the meantime, use these tips to guard yourself against a world filled with misleading statistics.