We’ve seen some interesting developments lately in the fields of robotics and computer vision. They’re not as academic as you’d expect: enormous tech successes like the Roomba and Kinect have relied as much on clever algorithms and software development as they have on marketing and retail placement. So what’s next for our increasingly intelligent cameras, webcams, TVs, and phones?

I spoke with Dr. Anthony Hoogs, head of computer vision research at Kitware, a company that’s a frequent partner of DARPA, NIH, and other acronyms you’d probably recognize. We discussed what one might reasonably expect from the next few years of advances in this growing field.

Kitware is a member of what we might reasonably call the third party in tech, one not often in the spotlight. Hoogs’s research division relies on government contracts and DARPA grants. We tend to cover privately-funded companies and products, with venture backing or corporate R&D budgets, which are often more high-profile.

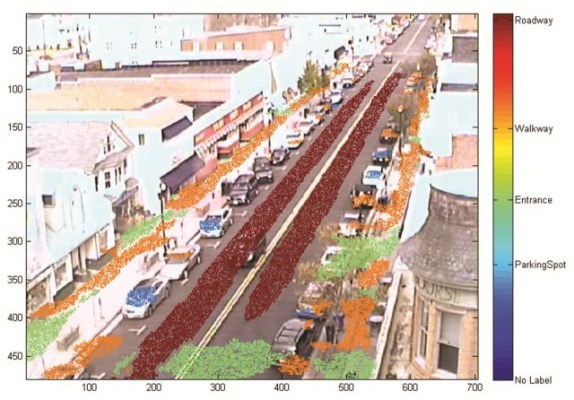

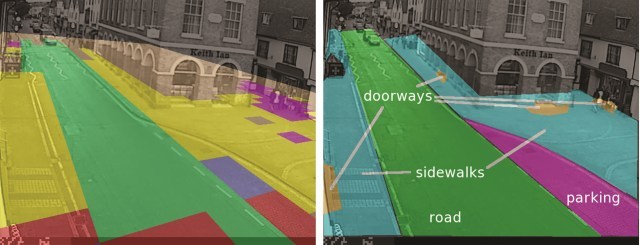

We’ve written before about the necessity of making sense of all the data being produced on the battlefield, what with cameras in every platoon, on every vehicle, and looking down from every aircraft. And then there’s the enormous amount of footage produced by domestic surveillance: security cameras private and public, traffic cameras, and the like. The amount of media produced by all these devices and networks is far too great to be effectively monitored by humans. That’s where Kitware comes in.

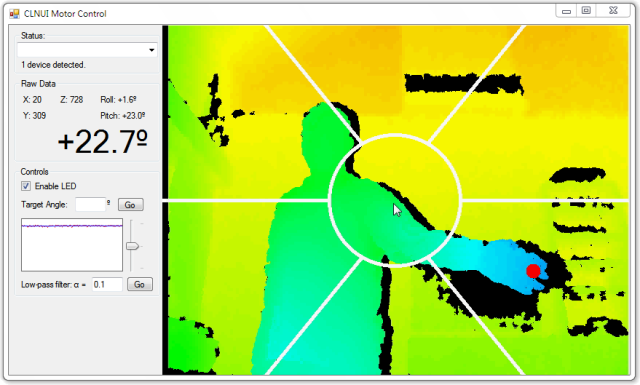

The next step in computer vision, Dr. Hoogs says, is what they’re working on: behavior analysis. Just as something like the Kinect needs to distinguish between a reach for the chip bag and any number of gestures, a surveillance system needs to determine whether something in its footage is interesting or not. “Interesting” is an incredibly complex notion, however, not nearly as simple as setting thresholds on movement and object shape.
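To make the contrast concrete, here is roughly what the "simple" approach of setting thresholds on movement looks like. This is my own minimal sketch, not anything from Kitware: the function names, the toy frame format (nested lists of grayscale values), and the threshold numbers are all assumptions chosen for illustration.

```python
from typing import List, Sequence

# Assumption: a frame is a grid of grayscale pixel values from 0 to 255.
Frame = Sequence[Sequence[int]]


def motion_fraction(prev: Frame, curr: Frame, pixel_delta: int = 25) -> float:
    """Fraction of pixels whose brightness changed by more than pixel_delta."""
    changed = 0
    total = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += 1
            if abs(a - b) > pixel_delta:
                changed += 1
    return changed / total if total else 0.0


def flag_frames(frames: List[Frame], min_fraction: float = 0.1) -> List[int]:
    """Indices of frames that differ 'enough' from the frame before them."""
    return [
        i
        for i in range(1, len(frames))
        if motion_fraction(frames[i - 1], frames[i]) >= min_fraction
    ]


# Hypothetical 2x2 footage: nothing happens, then one pixel lights up.
still = [[0, 0], [0, 0]]
moved = [[0, 200], [0, 0]]
flagged = flag_frames([still, still, moved, moved])
```

A filter like this flags anything that moves, whether it is a swaying branch or a person climbing a fence. It has no idea what the moving thing is or what it is doing, which is exactly the gap behavior analysis is meant to close.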

What Kitware is working on for military and surveillance purposes, however, would be equally at home in our own devices. Reducing thousands of hours of security footage to a few minutes of relevant footage is only one way to apply the algorithms and software they’re making. Allowing the analysis to happen in real time is the breakthrough that needs to happen in order to bring it to the living room. I asked whether better and more prevalent image sensors had made this easier, but he feels that the main catalyst has actually been better processors. I should have known: more sensors means more data, but not necessarily useful data. In the meantime, algorithms already effective at lower fidelity can be run faster and more frequently.

This has already hit things like point-and-shoot cameras, which are struggling to turn it into useful features and end up just adding more and more face-detection numbers. But the potential is huge. The end result is that every camera will effectively become a robot with situational awareness, capable of tracking and classifying every object in its vicinity, from a waving hand or smiling face to stress on a beam or an improperly parked vehicle.

On that subject, privacy becomes an issue. Until now, Kitware has largely relied on public databases for its research: images and video where legality is established. But as I wrote in Surveillant Society, the law and public dogma are things that consistently lag behind technology, and this is no exception. Should home security cameras maintain an off-site database of “trusted” faces? Should neighborhood cameras record frequent comers and goers, but flag strange people and vehicles? And will people act differently when they know their TV is “watching” them? It’s a complicated issue, and luckily one Dr. Hoogs gets to steer clear of. His team’s job is enabling the technology, not applying it prudently.

Kitware also releases much of its work publicly and it is widely used; other companies like PrimeSense are also hoping to become the de facto standard for emerging interfaces like depth control and object recognition.

I asked what we could realistically expect in real products over the next year or two. Dr. Hoogs feels that virtualized and augmented reality will be the next wave of consumer products to use it. Your phone already knows where it is, which direction it’s facing, what businesses are nearby, and so on. Early entries like Google Goggles and Layar show potential, but the processing and infrastructure both need upgrading before it hits the big time.

The big push comes when companies need to close the gap between the academic findings and a product. That means putting an end to development and features, something pure researchers can have trouble with (“it’s not finished!”). But as Microsoft showed with the Kinect, and many other companies are showing with intelligent image manipulation tech, the possibilities for product are as great as the possibilities of simply advancing the field.